Abstract

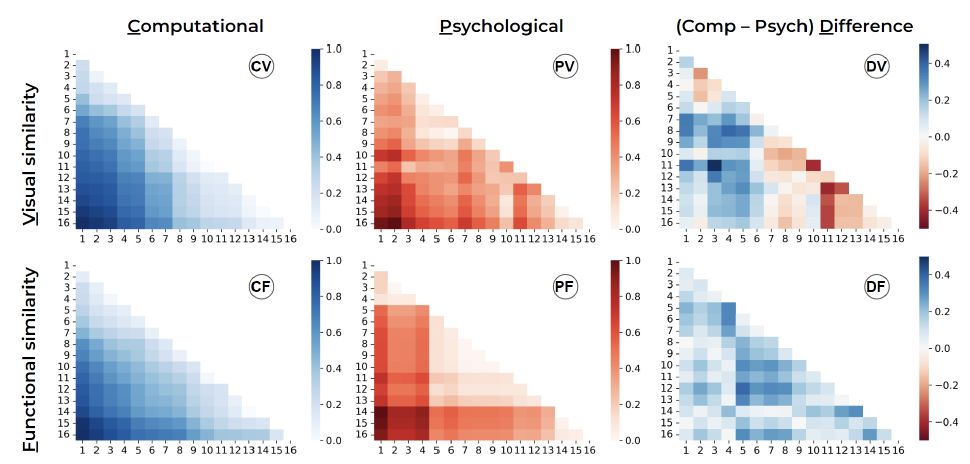

Because inspirational stimuli can help designers achieve better design outcomes, supporting the retrieval of impactful sources of inspiration is important. Existing retrieval methods have relied mostly on semantic relationships, e.g., analogical distances. Increasingly, data-driven methods can represent diverse stimuli using multi-modal information, enabling designers to access stimuli through less explored, non-text-based relationships. Toward improved retrieval of multi-modal representations of inspirational stimuli, this work compares human-evaluated and computationally derived similarities between stimuli in terms of non-text-based visual and functional features. In a human subjects study (n = 36), similarity assessments of triplets of 3D-model parts were collected and used to construct psychological embedding spaces. Distances between the embeddings of unique parts were used to represent their similarity in terms of visual and functional features. These distances were compared with distances computed between embeddings of the same stimuli generated using artificial intelligence (AI)-based deep-learning approaches. When used to assess similarity in appearance and function, the human-derived and AI-derived representations were largely consistent, with the highest agreement found for pairs of stimuli with low similarity. Alignment between the models was lower when identifying pairs of stimuli with higher levels of similarity. Importantly, qualitative data also revealed how humans made similarity assessments, including their use of more abstract information not captured by AI-based approaches. Toward providing designers with inspiration that considers design problems, ideas, and solutions in terms of non-text-based relationships, further exploration of how these relationships are represented and evaluated is encouraged.