Abstract

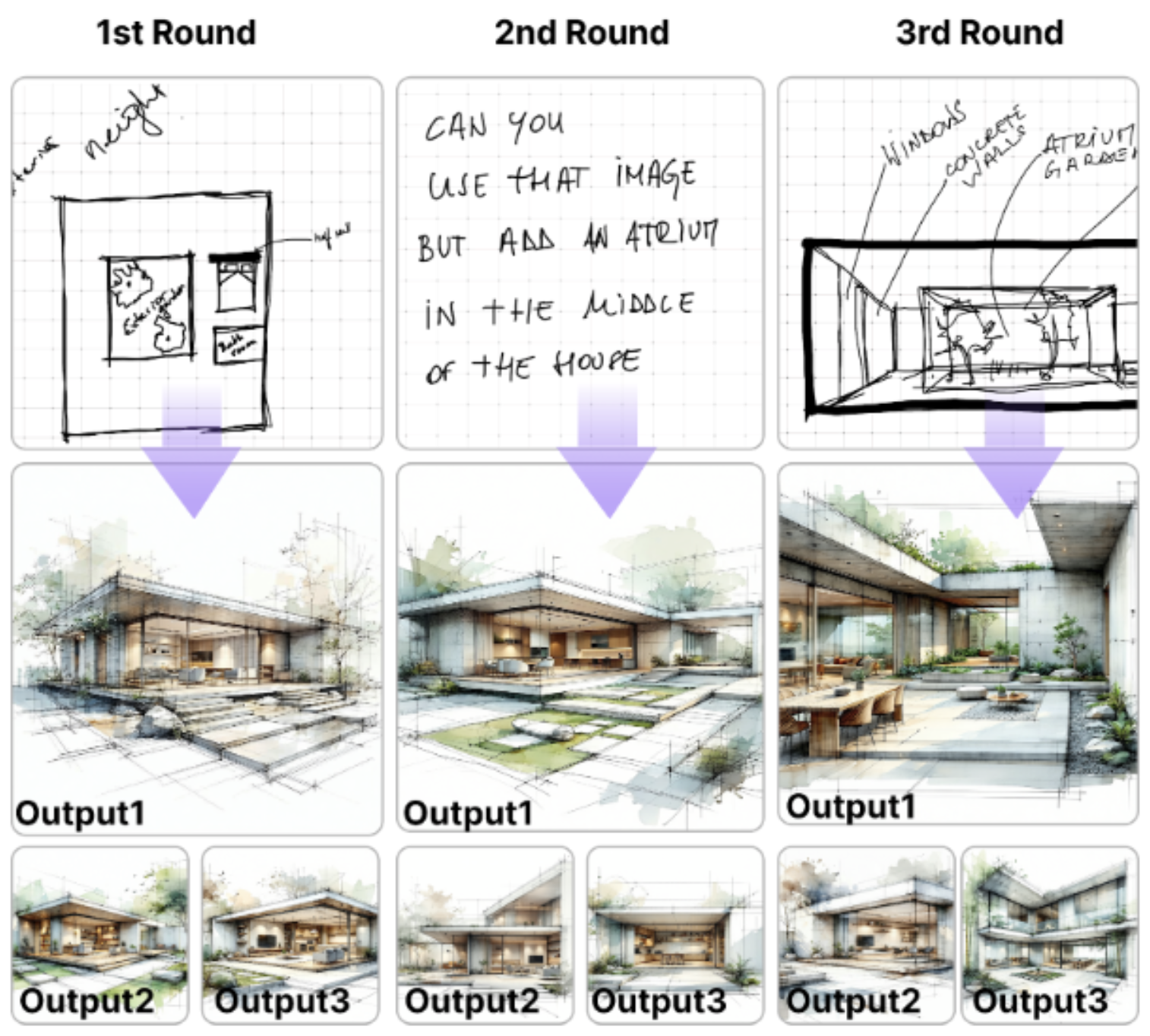

Generating ideas during co-design with a Generative AI (GenAI) system requires the gradual calibration of trust in that system. Trust plays a pivotal role in shaping human interactions with technology, and developing well-calibrated trust is essential for the effective use and integration of GenAI. Proper trust calibration helps prevent both underutilization of the system’s capabilities and dissatisfaction with its output. For engineers and system designers, trust is particularly important because it directly influences user responses, system adoption, and overall engagement with new technologies. To explore the factors that influence trust fluctuation when co-designing with a GenAI system, we analyzed 12 hours of conceptual human-AI co-design sessions conducted with a custom GenAI system capable of producing images across various generation modes, ranging from convergent-divergent to abstract-concrete, and of combining text and sketch prompting. Focusing on each moment of interaction with GenAI-generated images, we incrementally and qualitatively coded each trust-related excerpt from the think-aloud protocols. Through this approach, we identified 23 key factors that cause fluctuations in trust. Our findings reveal a complex network of factors that affect trust calibration and offer insights into how GenAI systems can be designed to facilitate faster and more effective trust-building in human-GenAI collaboration.